Redefining the Universal Interface Layer

At the Royal College of Art, and in collaboration with the design lab Moon, I conducted experimental design research into how AI integration could fundamentally reshape operating-system interfaces. The goal was to move beyond app-centric paradigms toward fluid, intent-driven interaction. The work investigates how visual composition can serve as a universal language for communicating with AI systems while preserving human creative agency.

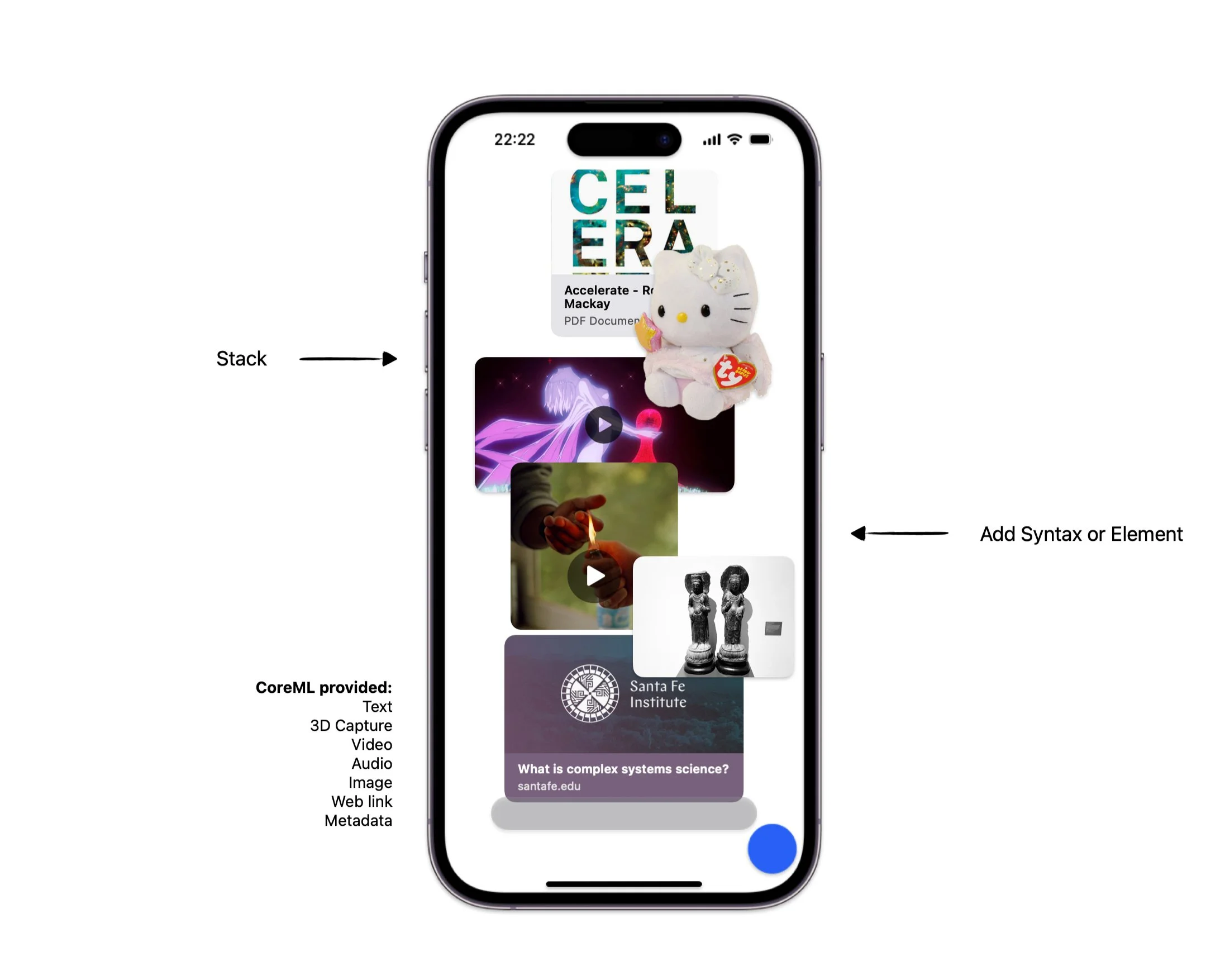

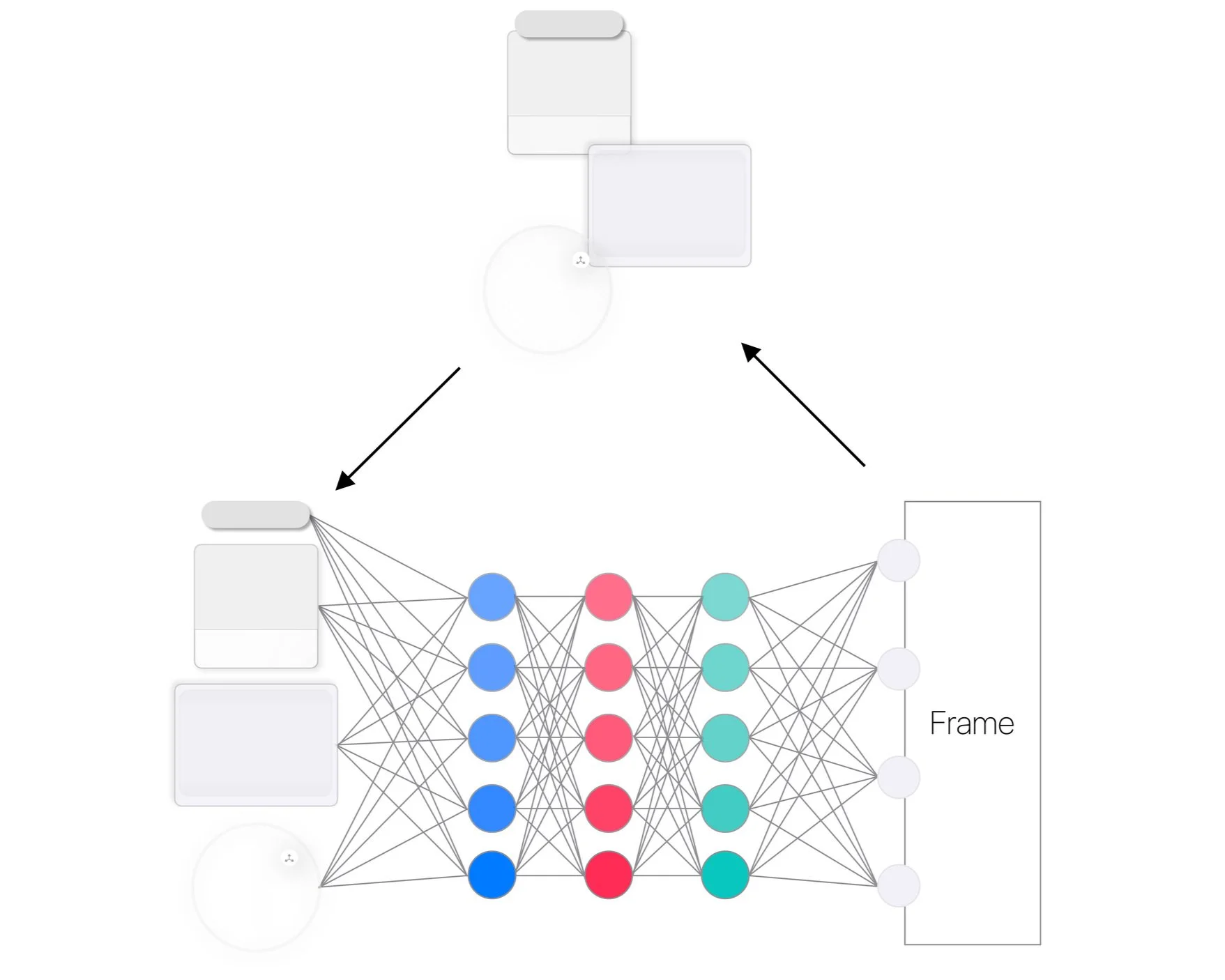

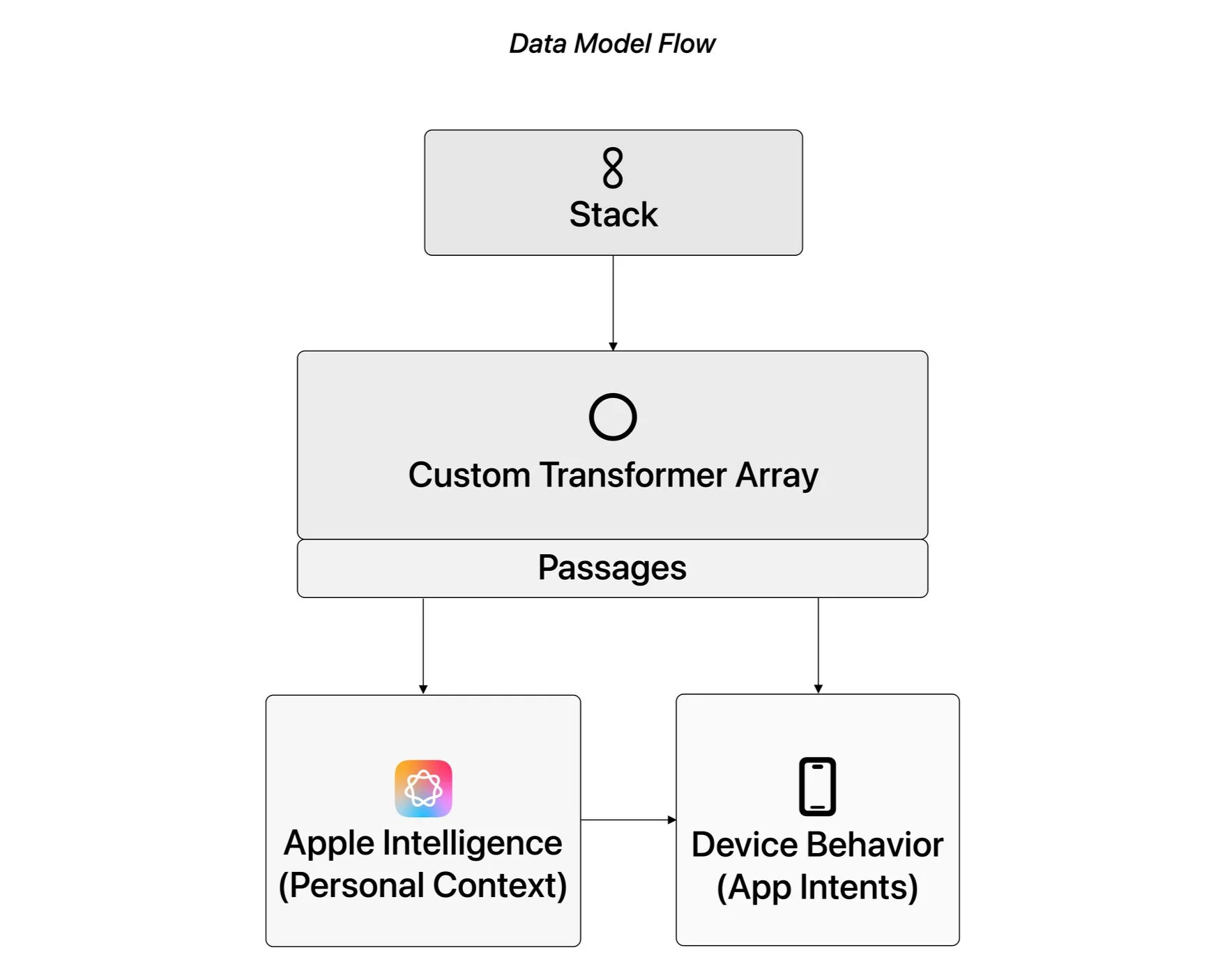

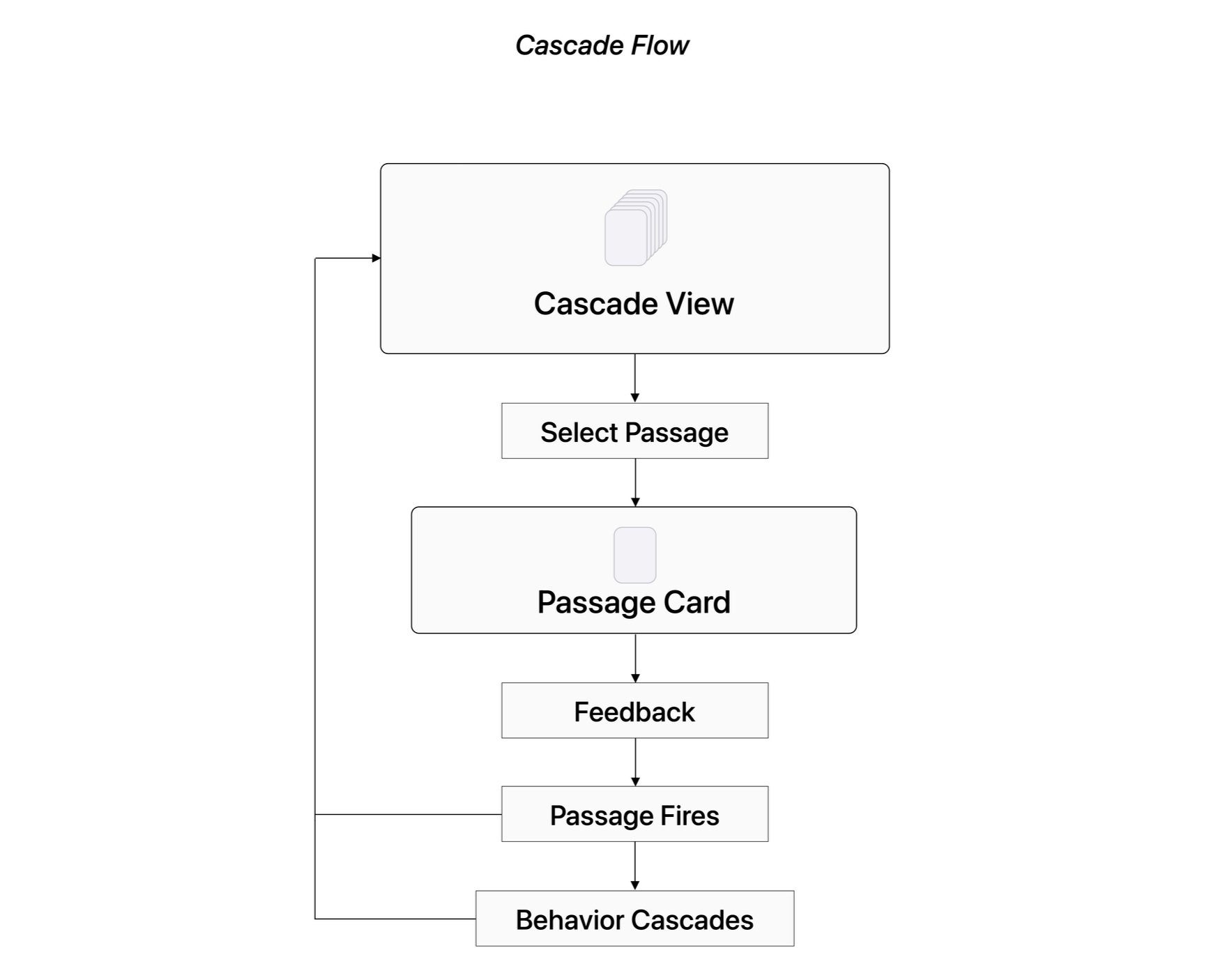

I developed a framework that treats visual composition as a rich, contextual language for AI interaction, one that carries far more information than isolated text commands. The Stack/Cascade model enables non-linear, multi-threaded collaboration with AI while keeping human oversight and creative control in place. The hybrid system architecture balances on-device privacy with cloud-based processing power, building on Apple's Core ML and App Intents frameworks as a proposed direction for Apple Intelligence.

The research positions AI not as a replacement for human creativity but as a collaborative medium that amplifies human intent. By creating interfaces that understand context, sequence, and aesthetic relationships, we enable users to work at higher levels of abstraction while retaining granular control. The work has implications for future iOS and macOS interfaces and beyond, suggesting pathways toward deeper AI integration that feels natural and empowering rather than overwhelming. It demonstrates how compositional interfaces can make advanced AI capabilities accessible to non-technical users while preserving the creative decision-making process.